In the world of database management systems (DBMS), normalization is key. It ensures data integrity and cuts down on data redundancy. By following certain rules and best practices, designers make databases efficient and easy to maintain. This is crucial for modern applications.

This guide explores database normalization deeply. We’ll look at its purpose, benefits, and the normal forms that lead to a well-structured database. Whether you’re new or experienced, knowing normalization is vital for creating strong, scalable databases.

We’ll cover the basics of normalization, from 1NF to 3NF, and use examples to show how these ideas work in practice. We’ll also weigh the advantages and disadvantages of normalization, and discuss when denormalization is an acceptable way to balance data integrity and speed.

Key Takeaways

- Normalization is a database design technique that organizes data to minimize redundancy and undesirable dependencies.

- Applying normal forms helps ensure data integrity and simplifies data maintenance.

- The first normal form (1NF) focuses on atomicity and removing repeating groups.

- The second normal form (2NF) eliminates partial dependencies.

- The third normal form (3NF) removes transitive dependencies and achieves data consistency.

- Denormalization may be necessary in certain scenarios to optimize database performance.

By the end of this guide, you’ll know a lot about normalization in DBMS. You’ll be ready to design efficient, reliable databases that last. Let’s start exploring database normalization!

Introduction to Database Normalization

In the world of database design, making sure data is organized well and consistent is key. This is where database normalization comes in. It’s a way to organize a database so it’s less redundant and more reliable.

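If databases aren’t normalized, they can face problems like data anomalies and redundancy. Redundancy means the same data is stored in many places. Data anomalies are the inconsistencies that follow from it: an update that changes one copy but not the others, an insert that can’t be made without unrelated data, or a delete that removes more information than intended. These issues can make databases less efficient and more prone to errors.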
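To make the idea of an update anomaly concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `orders_flat` table, its columns, and the sample rows are hypothetical and chosen only for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical unnormalized table: the customer's email is repeated on every order row.
conn.execute(
    "CREATE TABLE orders_flat (order_id INTEGER PRIMARY KEY, customer TEXT, email TEXT)"
)
conn.executemany(
    "INSERT INTO orders_flat VALUES (?, ?, ?)",
    [(1, "Ana", "ana@old.example"), (2, "Ana", "ana@old.example")],
)

# An update that touches only one of the duplicated rows...
conn.execute("UPDATE orders_flat SET email = 'ana@new.example' WHERE order_id = 1")

# ...leaves two conflicting emails stored for the same customer.
emails = sorted(
    row[0]
    for row in conn.execute(
        "SELECT DISTINCT email FROM orders_flat WHERE customer = 'Ana'"
    )
)
print(emails)
```

After the partial update the table holds both the old and the new address for the same customer, and nothing in the schema says which one is correct.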

The normalization process breaks down a database into smaller, easier-to-manage tables. It follows rules called normal forms. This helps get rid of data redundancy, keeps data accurate, and makes the database easier to maintain.

In the next parts, we’ll dive into why normalization is important and its benefits. We’ll also look at the different normal forms and how they help create a solid database. By the end, you’ll know how to make your database strong and efficient.

The Purpose and Benefits of Normalization in DBMS

Normalization is key in database management. It ensures data is accurate, consistent, and efficient. It helps designers create a database that’s well-organized and easy to manage. This makes data maintenance simpler and reduces redundancy.

Reducing Data Redundancy

Normalization aims to cut down on duplicate data. Storing data in many places wastes space and can lead to errors. It breaks data into smaller, related tables. This way, each piece of information is stored just once.

Ensuring Data Integrity

Normalization is crucial for keeping data reliable. It makes sure data is accurate and consistent. It uses rules and relationships to prevent errors during updates or changes.
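As one example of such a rule, a foreign key lets the database reject rows that point at data that does not exist. The sketch below uses Python's built-in `sqlite3` module; the `customers` and `orders` tables are hypothetical, and note that SQLite only enforces foreign keys once `PRAGMA foreign_keys = ON` has been set on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves enforcement off by default

# Hypothetical tables: every order must reference an existing customer.
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders ("
    " order_id INTEGER PRIMARY KEY,"
    " customer_id INTEGER NOT NULL REFERENCES customers(customer_id))"
)

conn.execute("INSERT INTO customers VALUES (1, 'Ana')")
conn.execute("INSERT INTO orders VALUES (100, 1)")  # valid: customer 1 exists

try:
    conn.execute("INSERT INTO orders VALUES (101, 999)")  # no customer 999
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(rejected, order_count)
```

The second insert fails with an integrity error, so only the valid order is stored.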

Simplifying Data Maintenance

A normalized database is easier to keep up with. It reduces data redundancy and ensures consistency. This makes updates, insertions, and deletions simpler. It saves time and effort in the long run.

Understanding the Normal Forms

In database design, normal forms are key to keeping data accurate and reducing redundancy. They offer guidelines and rules for organizing databases. This helps avoid data errors and dependencies. Let’s explore the three main normal forms: 1NF, 2NF, and 3NF.

The first normal form (1NF) emphasizes atomicity. It means each column in a row holds only one value. It also removes repeating groups in a single record. Following 1NF sets a solid base for a database.

The second normal form (2NF) aims to remove partial dependencies. It ensures every non-key column depends on the whole primary key, not just part of it. Achieving 2NF improves the database structure and reduces data errors.

The third normal form (3NF) goes further by eliminating transitive dependencies. Simply put, it makes sure no non-key column depends on another non-key column. Reaching 3NF makes your database highly normalized, reducing data duplication and ensuring data integrity.

When normalizing, it’s crucial to follow the right rules. These rules help you spot and fix data dependencies. This leads to a database that’s efficient, easy to manage, and free from errors.

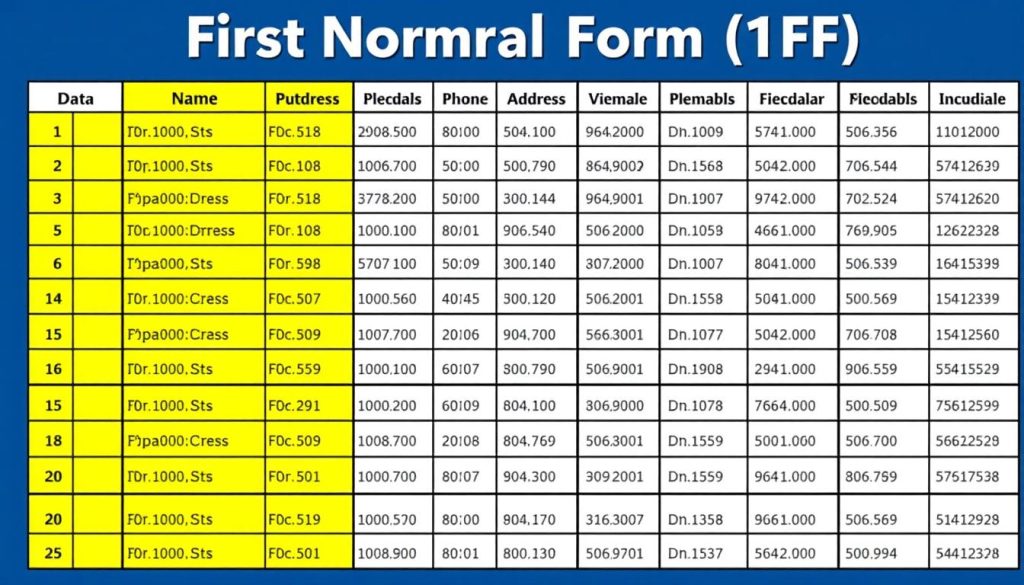

First Normal Form (1NF)

The First Normal Form (1NF) is the first step in making a database better. It focuses on making sure data is in its simplest form and removing any repeating groups. Achieving 1NF helps create a database that is well-organized and works efficiently.

Atomicity and Removing Repeating Groups

In 1NF, each column in a table should have only one piece of data. This means the data can’t be split further. It ensures each cell in the table has just one value, not multiple.

Repeating groups happen when a table spreads the same kind of information across several columns. For example, a table with “Phone1,” “Phone2,” and “Phone3” columns is not in 1NF. To fix this, we split the repeating data into separate rows, often in a new table.

Examples of 1NF Normalization

Let’s look at an example to show how to make a table 1NF. Imagine we have a “Students” table with these columns:

- StudentID

- Name

- Course1

- Course2

- Course3

To make this table 1NF, we remove the repeating groups (Course1, Course2, Course3). We also make sure each piece of data is atomic. The final 1NF table looks like this:

- StudentID

- Name

- Course

Now, each course is in its own row. The StudentID and Name columns repeat as needed. This makes it easier to work with the data.
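The conversion described above can be sketched with Python's built-in `sqlite3` module. The table names `students_wide` and `students_1nf`, the sample rows, and the choice of (StudentID, Course) as the primary key are assumptions made for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# The original layout with a repeating group of course columns (sample data is made up).
conn.execute(
    "CREATE TABLE students_wide ("
    " StudentID INTEGER, Name TEXT, Course1 TEXT, Course2 TEXT, Course3 TEXT)"
)
conn.executemany(
    "INSERT INTO students_wide VALUES (?, ?, ?, ?, ?)",
    [(1, "Ana", "Math", "Physics", None), (2, "Ben", "History", None, None)],
)

# 1NF layout: one course per row, identified by (StudentID, Course).
conn.execute(
    "CREATE TABLE students_1nf ("
    " StudentID INTEGER, Name TEXT, Course TEXT,"
    " PRIMARY KEY (StudentID, Course))"
)

# Turn each non-empty course column into its own row.
for student_id, name, *courses in conn.execute("SELECT * FROM students_wide").fetchall():
    for course in courses:
        if course is not None:
            conn.execute(
                "INSERT INTO students_1nf VALUES (?, ?, ?)", (student_id, name, course)
            )

rows_1nf = conn.execute(
    "SELECT StudentID, Name, Course FROM students_1nf ORDER BY StudentID, Course"
).fetchall()
print(rows_1nf)
```

A student with a fourth course now simply gets a fourth row, with no schema change needed.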

By following 1NF, we give every value its own cell, which improves data quality and makes the database easier to query and manage. Note that the repeated Name values are themselves a form of redundancy; the more advanced normalization steps deal with that.

Second Normal Form (2NF)

After bringing a table into First Normal Form (1NF), we move to Second Normal Form (2NF). The main aim of 2NF is to get rid of partial dependencies. This ensures that all non-key attributes depend on the whole primary key.

Some tables have a composite primary key, made of several attributes. Partial dependencies happen when a non-key attribute relies on just a part of the key, not the whole. This can cause data to be duplicated and not match up.

To reach 2NF, find and fix partial dependencies. You do this by splitting off the dependent attributes into a new table. The new table holds those attributes, and the part of the key they depend on becomes its primary key. The original table keeps its full key and any fully dependent attributes.
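As a rough sketch of this split, the example below assumes a 1NF table `students_1nf` with the composite key (StudentID, Course), where Name depends only on StudentID. The table names and sample rows are hypothetical; the code uses Python's built-in `sqlite3` module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Assumed starting point: Name depends on StudentID alone, a partial dependency.
conn.execute(
    "CREATE TABLE students_1nf ("
    " StudentID INTEGER, Name TEXT, Course TEXT,"
    " PRIMARY KEY (StudentID, Course))"
)
conn.executemany(
    "INSERT INTO students_1nf VALUES (?, ?, ?)",
    [(1, "Ana", "Math"), (1, "Ana", "Physics"), (2, "Ben", "History")],
)

# New table: the partially dependent attribute, keyed by the part of the key it depends on.
conn.execute("CREATE TABLE students (StudentID INTEGER PRIMARY KEY, Name TEXT)")
# Remaining table: keeps the full composite key.
conn.execute(
    "CREATE TABLE enrollments ("
    " StudentID INTEGER REFERENCES students(StudentID),"
    " Course TEXT,"
    " PRIMARY KEY (StudentID, Course))"
)

conn.execute("INSERT INTO students SELECT DISTINCT StudentID, Name FROM students_1nf")
conn.execute("INSERT INTO enrollments SELECT StudentID, Course FROM students_1nf")

students = conn.execute("SELECT StudentID, Name FROM students ORDER BY StudentID").fetchall()
enrollment_count = conn.execute("SELECT COUNT(*) FROM enrollments").fetchone()[0]
print(students, enrollment_count)
```

Each student's name is now stored once, while all three enrollments are preserved.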

Identifying Functional Dependencies

To check if a table is in 2NF, look at the functional dependencies between attributes. A functional dependency exists when the value of one attribute (or set of attributes) determines the value of another: any two rows that agree on the first must also agree on the second. Spotting these helps you find and fix partial dependencies.
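One informal way to explore functional dependencies is to test them against sample rows. The helper `holds` below is a hypothetical function written for this illustration, not part of any library. Keep in mind that sample data can only disprove a dependency; whether it truly holds is a question about the business rules.

```python
def holds(rows, determinant, dependent):
    """Return True if no two rows agree on `determinant` but differ on `dependent`."""
    seen = {}
    for row in rows:
        key = tuple(row[attr] for attr in determinant)
        value = tuple(row[attr] for attr in dependent)
        # setdefault stores the first value seen for this key and returns it afterwards.
        if seen.setdefault(key, value) != value:
            return False
    return True


# Made-up sample rows for a table keyed by (StudentID, Course).
rows = [
    {"StudentID": 1, "Name": "Ana", "Course": "Math"},
    {"StudentID": 1, "Name": "Ana", "Course": "Physics"},
    {"StudentID": 2, "Name": "Ben", "Course": "History"},
]

name_depends_on_id = holds(rows, ["StudentID"], ["Name"])
course_depends_on_id = holds(rows, ["StudentID"], ["Course"])
print(name_depends_on_id, course_depends_on_id)
```

Here Name is determined by StudentID alone, which is only part of the key, so it is a candidate for the partial dependency that 2NF removes. Course is not determined by StudentID, because student 1 has two courses.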

After removing all partial dependencies and making sure all non-key attributes depend on the whole key, your database is in 2NF. This makes your data more reliable, reduces duplication, and makes upkeep easier.

Third Normal Form (3NF)

The Third Normal Form (3NF) is a key step in making databases better. It builds on 1NF and 2NF. The main goal is to get rid of transitive dependencies. This makes sure non-key attributes only depend on the primary key, not on other non-key attributes.

By reaching 3NF, you make your database more reliable and consistent. This is crucial for keeping data accurate and up-to-date.

Eliminating Transitive Dependencies

Transitive dependencies happen when a non-key attribute depends on another non-key attribute, which in turn depends on the primary key. It’s like a chain of dependency.

To get to 3NF, you need to break these chains. This means removing the transitive dependencies.

Let’s say you have a table for employee info. It includes employee ID, name, department, and department location. Here, the department location depends on the department, which depends on the employee ID. To make this table 3NF, you split it into two. One for employees and another for departments.
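The split in this example might look like the following sketch, written with Python's built-in `sqlite3` module. The column names and sample rows are assumptions, and the department name is used as the key of the `departments` table only to keep the example short.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Department facts live in their own table, so each location is stored once.
conn.execute("CREATE TABLE departments (department TEXT PRIMARY KEY, location TEXT NOT NULL)")
# Employees keep only the attributes that depend directly on employee_id.
conn.execute(
    "CREATE TABLE employees ("
    " employee_id INTEGER PRIMARY KEY,"
    " name TEXT NOT NULL,"
    " department TEXT REFERENCES departments(department))"
)

conn.executemany(
    "INSERT INTO departments VALUES (?, ?)", [("Sales", "Berlin"), ("IT", "Lisbon")]
)
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [(1, "Ana", "Sales"), (2, "Ben", "Sales"), (3, "Cy", "IT")],
)

# Relocating a department is now a single-row update.
conn.execute("UPDATE departments SET location = 'Madrid' WHERE department = 'Sales'")

# A join reassembles the original view of the data.
locations = conn.execute(
    "SELECT e.name, d.location FROM employees e"
    " JOIN departments d ON d.department = e.department"
    " ORDER BY e.employee_id"
).fetchall()
print(locations)
```

Both Sales employees see the new location after one update, and the IT row is untouched.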

Achieving Data Integrity and Consistency

3NF helps keep data accurate and consistent. It removes data redundancy and anomalies. This makes it easier to update and manage your database.

With 3NF, you know each non-key attribute depends only on the primary key. This creates a cleaner, more reliable database structure.

Getting to 3NF might mean adding more tables and foreign keys. But the benefits are worth it. You get a database that’s more stable, efficient, and less likely to have data errors.

What Is Normalization in DBMS

Normalization is key in database management systems (DBMS). It helps organize data well. It breaks down complex data into simpler parts to keep data safe, reduce repetition, and make data management easier.

By definition, normalization means organizing data to avoid duplication and dependency problems. It applies a set of rules, the normal forms, to produce a strong and dependable database design.

Definition and Key Concepts

Normalization makes a relational database better by removing data duplication and improving data integrity. It splits big tables into smaller ones. It also sets up relationships between them based on their connections.

The main ideas of normalization are:

- Getting rid of data duplication

- Making sure data dependencies are right

- Lowering data errors and inconsistencies

- Making data upkeep and updates simpler

The Role of Normalization in Database Design

Normalization is very important in database design. It helps designers make a well-organized and efficient database. This follows best practices and database design principles.

Some big advantages of normalization in database design are:

- It makes data organization and clarity better

- It cuts down data redundancy and storage needs

- It boosts data integrity and consistency

- It makes data relationships simpler and data retrieval easier

- It makes database upkeep and updates simpler

By using normalization in database design, developers can make a strong and growing database. This database manages data well and meets the needs of the application or system it supports.

Advantages and Disadvantages of Normalization

Normalization in database design has many benefits. It helps keep data consistent and accurate. By avoiding data duplication, it ensures each piece of information has a single source of truth.

This approach also makes data upkeep easier. Changes to data are made in one place, spreading to all parts of the database. This makes managing data smoother and cuts down on errors.

But, normalization has its downsides too. It can make queries more complex. When data is split into many tables, getting information back together can be tough. This can slow down how fast data is retrieved.

Normalization usually saves disk space, since each fact is stored once, but the extra joins can make queries slower. In contrast, denormalized structures use more storage and might be faster to read because they need fewer joins.

Ultimately, the decision to normalize a database depends on the specific requirements and priorities of the application. We must consider both the benefits, like data integrity, and the drawbacks, like query complexity. Finding the right balance is essential for a database that works well and efficiently.

Denormalization: When to Break the Rules

Database normalization is key for keeping data right and avoiding too much repetition. But, there are times when you might want to break these rules. Denormalization is when you deliberately add redundant data to your database to make reads faster.

Following normalization too closely can make your database slow. Denormalizing it can make queries run faster by needing fewer joins. But, you have to find the right balance to avoid problems with data consistency and upkeep.

Balancing Normalization and Performance

Thinking about denormalization means weighing data integrity against how fast your database runs. Normalization keeps data consistent but can slow things down. Denormalization makes things faster but can lead to data issues if not managed well.

Deciding to denormalize should be based on a deep look at what your application needs. Focus on the data and queries that matter most. This way, you can make your database faster without losing its integrity.

Scenarios for Denormalization

Denormalization is useful in a few common situations:

- Frequently accessed data: Storing data that’s often used together can save time by avoiding complex joins.

- Complex queries: Denormalization can make complex queries easier by pre-calculating data, saving time on calculations.

- Reporting and analytics: For data warehousing and business intelligence, denormalized databases can speed up reports and analysis.

Remember, denormalization should be done thoughtfully and only when it’s really needed. Make sure you have a plan for keeping data consistent and handling updates to denormalized data.
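One possible way to keep a denormalized copy consistent is a database trigger. The sketch below uses Python's built-in `sqlite3` module; the `employees_report` table and the `sync_location` trigger are hypothetical names, and a trigger is only one of several options (application code or scheduled rebuilds are others).

```python
import sqlite3

conn = sqlite3.connect(":memory:")

conn.execute("CREATE TABLE departments (department TEXT PRIMARY KEY, location TEXT)")
# Denormalized reporting table: location is copied onto every employee row to avoid a join.
conn.execute(
    "CREATE TABLE employees_report ("
    " employee_id INTEGER PRIMARY KEY, name TEXT, department TEXT, location TEXT)"
)

# The trigger propagates location changes to the redundant copies.
conn.execute(
    """
    CREATE TRIGGER sync_location AFTER UPDATE OF location ON departments
    BEGIN
        UPDATE employees_report
        SET location = NEW.location
        WHERE department = NEW.department;
    END
    """
)

conn.execute("INSERT INTO departments VALUES ('Sales', 'Berlin')")
conn.executemany(
    "INSERT INTO employees_report VALUES (?, ?, ?, ?)",
    [(1, "Ana", "Sales", "Berlin"), (2, "Ben", "Sales", "Berlin")],
)

conn.execute("UPDATE departments SET location = 'Madrid' WHERE department = 'Sales'")

report_locations = [
    row[0]
    for row in conn.execute("SELECT location FROM employees_report ORDER BY employee_id")
]
print(report_locations)
```

Reads from `employees_report` need no join, at the cost of extra write work every time a department moves.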

Conclusion

Database normalization is key for creating databases that keep data consistent and reliable. It helps avoid data duplication and ensures data is managed well. This process is crucial for a strong and dependable database system.

We looked at different normal forms in this article. The first normal form (1NF) removes repeating groups. The second normal form (2NF) gets rid of partial dependencies. The third normal form (3NF) deals with transitive dependencies. Each step helps make the database more organized.

Normalization has many advantages, but it’s important to balance it with performance. Sometimes, denormalization is needed to speed up queries. But, it should be used carefully, only when it’s really needed. Remember the normalization principles when designing databases. Aim for databases that are efficient, easy to maintain, and reliable.

FAQ

What is database normalization?

Database normalization organizes data to avoid duplication and ensure accuracy. It breaks down large tables into smaller ones. These smaller tables have specific rules to follow, known as normal forms.

Why is normalization important in database design?

Normalization is key because it reduces data duplication and errors. It makes databases more efficient and easier to manage. This is done by organizing data into structured tables and setting up proper relationships.

What are the different normal forms in database normalization?

The main normal forms are:

- First Normal Form (1NF): Makes sure data is in single units and removes repeating groups.

- Second Normal Form (2NF): Ensures that non-key data depends on the whole primary key, not just part of it.

- Third Normal Form (3NF): Removes any indirect relationships, so data only depends on the primary key.

What are the benefits of normalizing a database?

Normalizing a database offers several advantages:

- It reduces data duplication and ensures accuracy.

- It makes data easier to maintain and update.

- It helps avoid data errors and inconsistencies.

- It can make updates and simple lookups more efficient, although queries that span many tables need joins.

What is denormalization and when is it used?

Denormalization breaks normalization rules to boost database performance. It combines data from several tables into one to speed up queries. It’s used when quick data access is more important than strict normalization.

How does normalization affect database performance?

Normalization can both help and hinder database performance. It reduces data duplication and ensures accuracy, making storage and maintenance easier. However, it might require more complex queries, affecting speed. Finding the right balance is crucial.

Can a database be overnormalized?

Yes, overnormalization happens when databases are split too much. This leads to too many joins, slowing down queries. It’s important to find a balance that meets data integrity and performance needs.